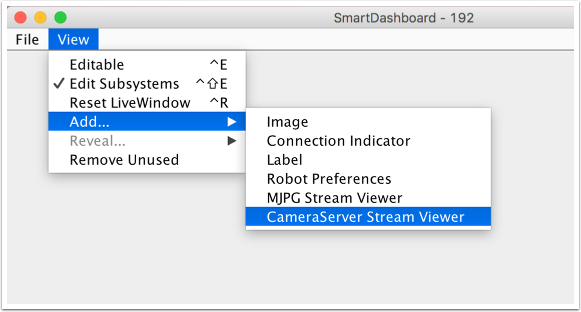

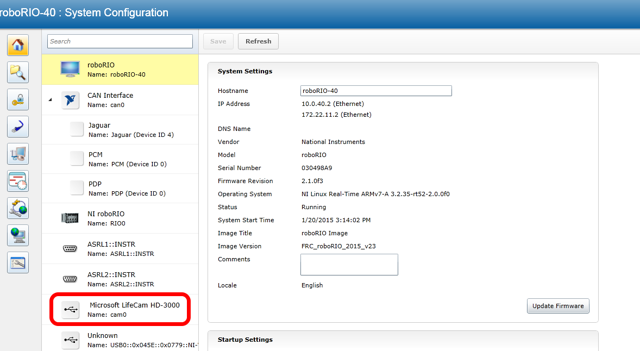

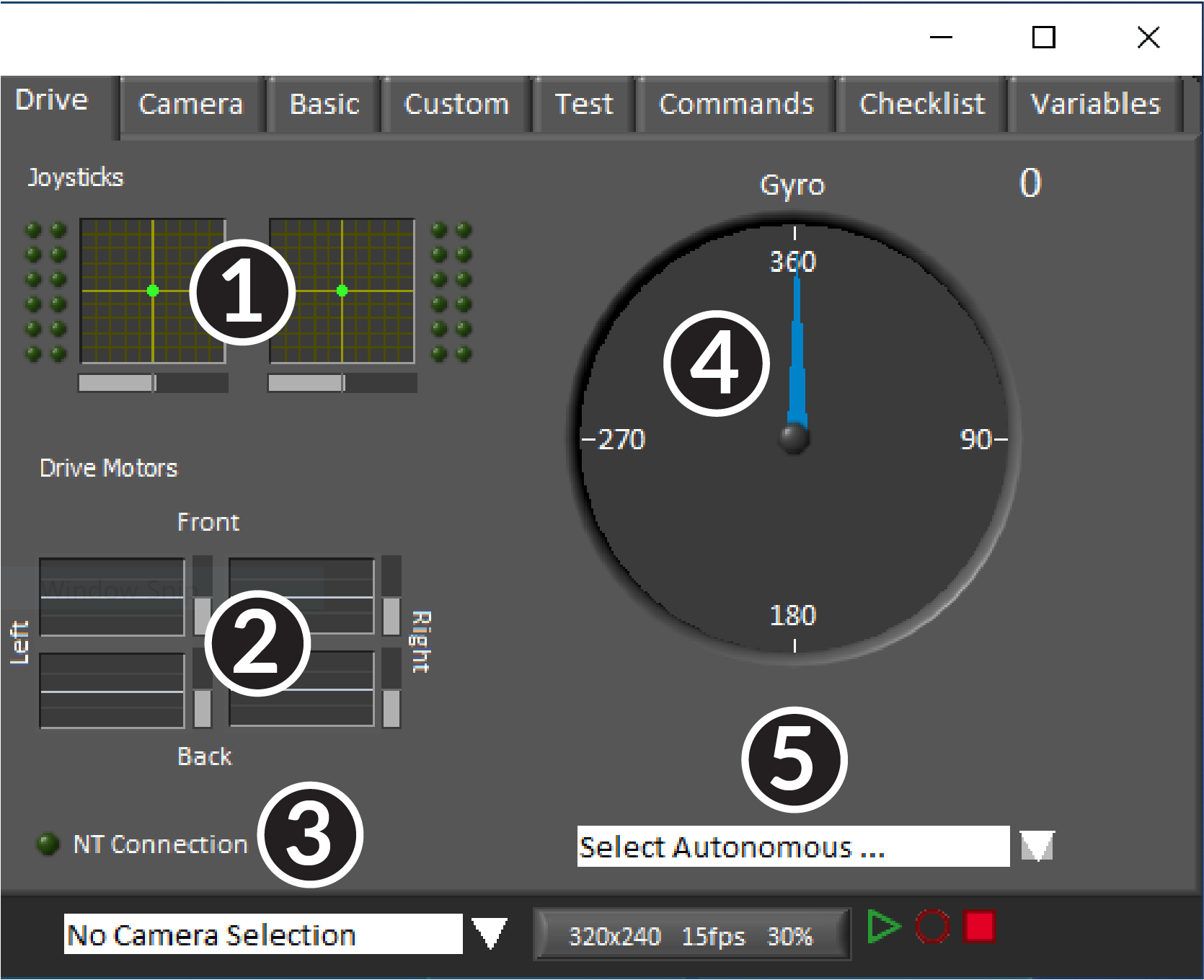

Our framework library is shared between FTC and FRC, and it does support GRIP. In another thread, I commented on the possibility of using GRIP for FTC. If you are interested, you can take a look at how our FRC code uses GRIP through our framework library, which takes care of running vision processing on a separate thread. The only thing missing is a thin platform-dependent layer that deals with capturing images from the Android phone camera.

In this folder, GripPipeline.java is the code generated by the GRIP vision tool. GripVision.java is a game-specific filter that you have to write to sort through the potential false targets and calculate the physical info you need from the vision targets (e.g. calculate the distance/angle of the target).

In this folder, FrcVisionTarget.java and FrcOpenCVDetector.java contain the code that grabs a frame from the FRC USB or Axis camera. This is the part that needs to be ported to FTC to use the Android camera instead. It also contains code to put a processed frame (with "annotation") on a display device. This is the Smart Dashboard in FRC, and it will be the Android phone's JavaCameraView layout in FTC.

In this folder, TrcVisionTask.java provides platform-independent vision task support and TrcThread.java provides platform-independent multi-threading support.

The code in the specific example OpMode I found in mikets' linked repo seems a little compacted. Although it is an OpMode, its logic is hard to follow if you don't know how to set up FtcOpMode in TRC, especially for an inexperienced programmer, because of its use of async programming styles. I feel the exposed API should be much cleaner for what the OpMode is trying to accomplish, although in the process of "cleaning" the API the OpMode would no longer be self-contained. Plus, the only reason I see right now for rolling my own FTC OpenCV library (backporting what Xtensible has, but that implementation is crude) is that I haven't seen a good self-contained library written for FTC. Every other library I have found bundles its own version of the FTC SDK, making it hard to use in a modular way.

That OpMode is just playing around with OpenCV face detection. Using OpenCV with GRIP should be a lot simpler (e.g. no need to load the cascade classifier XML, etc.). Also, during the FRC season, the OpenCV vision support in the TRC library was streamlined. I haven't had time to backport it to the FTC repo yet. In the new setup, code has been added to support platform-independent multi-threading, so that it is even independent of the TRC framework.
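To give a rough idea of what a game-specific filter in the spirit of GripVision.java might do, here is a minimal sketch. It is not the TRC library code: the class `TargetFilterSketch`, its nested types, the aspect-ratio check, and the pinhole-camera formulas are all assumptions made for illustration. It takes candidate bounding boxes (such as a GRIP pipeline might produce), rejects the ones with an implausible shape, keeps the largest remaining one, and estimates the distance and horizontal angle to it.

```java
import java.util.List;
import java.util.Optional;

/** Hypothetical sketch of a game-specific target filter; names are not from the TRC library. */
final class TargetFilterSketch {

    /** Bounding box of a candidate contour, in pixels, origin at the top-left of the image. */
    static final class Box {
        final double x, y, width, height;

        Box(double x, double y, double width, double height) {
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
        }

        double area() { return width * height; }

        double centerX() { return x + width / 2.0; }
    }

    /** Physical info derived from the chosen target. */
    static final class TargetInfo {
        final double distance;      // same unit as the real target width passed in
        final double angleDegrees;  // positive means the target is right of image center

        TargetInfo(double distance, double angleDegrees) {
            this.distance = distance;
            this.angleDegrees = angleDegrees;
        }
    }

    /**
     * Rejects boxes whose width/height ratio falls outside [minAspect, maxAspect]
     * (assumed to be false targets) and returns the largest remaining box, if any.
     */
    static Optional<Box> selectTarget(List<Box> candidates, double minAspect, double maxAspect) {
        Box best = null;
        for (Box b : candidates) {
            if (b.width <= 0 || b.height <= 0) {
                continue; // degenerate box, cannot be a real target
            }
            double aspect = b.width / b.height;
            if (aspect < minAspect || aspect > maxAspect) {
                continue; // wrong shape, treat as a false target
            }
            if (best == null || b.area() > best.area()) {
                best = b;
            }
        }
        return Optional.ofNullable(best);
    }

    /**
     * Pinhole-camera estimate (an assumption of this sketch):
     * distance = realWidth * focalLengthPx / widthPx,
     * angle = atan(horizontal pixel offset from image center / focalLengthPx).
     */
    static TargetInfo locate(Box target, double imageWidthPx, double focalLengthPx, double realWidth) {
        double distance = realWidth * focalLengthPx / target.width;
        double offsetPx = target.centerX() - imageWidthPx / 2.0;
        double angleDegrees = Math.toDegrees(Math.atan2(offsetPx, focalLengthPx));
        return new TargetInfo(distance, angleDegrees);
    }
}
```

In a setup like the one described, `selectTarget` and `locate` would be called on the vision thread for each processed frame, with the focal length in pixels obtained from a one-time camera calibration. The real filter would depend on the game's target shape, so the aspect-ratio limits here are only placeholders.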
